ML4Sci #2: Nowcasting rain forecasts from radar; graph similarities predict zeolite properties; cycle-consistent GANs for climate change visualizations;

Some more musings on slow numerical models and open-access scientific literature

Hi, I’m Charles Yang and I’m sharing (roughly) monthly issues about applications of artificial intelligence and machine learning to scientific and engineering problems. If you’re interested in receiving this newsletter or if you know someone who would, subscribe here.

This newsletter is still in its early days and I’m still figuring out the format and topical coverage that I want to pursue. If you have any feedback or suggestions, including interesting articles, new ideas for how to format these newsletters, length considerations, scope or coverage, or anything else, reach out with your ideas!

⛈Nowcasting rain forecasts from radar

Nowcasting: short-term, high-resolution, low-latency, predictions

Google researchers recently presented at the 2019 NeurIPS conference @Vancouver on nowcasting rain forecasts based on radar images. As anyone who’s been drenched on what allegedly was supposed to be a sunny day knows, weather forecasts still have a way to go. In addition, such work is important for climate prediction, flash flood warning, and renewable energy generation forecasting. Google AI also has a great blog post highlighting this work, that does a terrific job of explaining and visualizing their results(frankly, I’d recommend reading the blog post over the paper). In essence, they use a U-net convolutional neural network architecture to predict the next radar at 0-6 hours in the future, given the past 1 hour of radar measurements.

This work fits into a common theme that I hope to explore more in this newsletter: using machine learning models to replace numerical, physics-based, solvers. Current weather forecast models are extremely compute-intensive, trying to make forecasts by taking into account a vast variety of physical factors at a range of physical length scales. As a result, these numerical models are very slow, very compute intensive, and don’t even work that well! These types of problems are extremely well suited to machine learning: it’s fast, relatively cheap computationally, and is able to learn very complex phenomena simply by pattern-matching from datasets. It fits particularly well into weather forecasting because while we would like computationally cheap methods, we need low-latency solvers, otherwise the weather will have already changed by the time your prediction is ready! In other words, the federal government might be willing to build ever larger supercomputers for better weather forecasts, but a smarter solution is just to use a faster algorithm e.g. deep learning. Tthis overall problem setup is true not just in weather forecasting, but also in optical physics solvers, finite elements, density functional theory/molecular dynamics, etc. As you may have noticed, deep learning is actually on the faster side of the compute curve for scientific problems, a fact that is easy to forget when many other deep learning applications are still trying to squeeze out the last few milliseconds of latency from a neural network for, say, a self-driving car. This is just one example of how scientific applications have different problem criteria and contexts than traditional deep learning research funded by industry.

Graph Similarities drive Zeolite Transformations 🔒 [not open access, see below for preprint]

Background

Zeolites are a class of highly porous crystalline structures, with a variety of applications in catalysis, filtration, and ion-exchange membranes. When synthesizing zeolites, we would like to reduce the use of high temperatures, long synthesis times, and use of organic agents in synthesis procedures. One common method of achieving these objectives is interzeolite transformation i.e. where one zeolite crystal structure is transformed into another zeolite structure. However, it is unclear what mechanistic principle guides interzeolite transformations; while several have been proposed, none clearly explain all observed transformations.

Summary

In this work, the authors (from the material science department at MIT) scrape 70,000 articles and identify 391 experimentally confirmed interzeolite transformations using a “combination of natural-language processing and human supervision” [read: many late nights for some poor graduate students]. Using this dataset, they first establish that current proposed mechanisms (change in framework density(FD) or the presence of common building units(CBU) between pairs of zeolties) do not explain all experimentally known zeolite transformations.

The authors decide to adopt a graph-theoretic approach by searching for the presence of graph isomorphisms between unit cells of different zeolites. In essence, you can consider zeolite crystal structures as balls-and-sticks (atoms-and-bonds) which can then be treated as graphs. Two graphs are isomorphic if there exists a bijection between all vertices i.e. you can map each vertex in one graph to another vertex in another graph and they will all have the same connectivity.

While graph isomorphism is a good starting test for interzeolite transformations, it suffers from the following problems: 1) it is a binary metric, 2) it is computationally intensive for large graphs, 3) there are many possible building block supercells for a given zeolite. To solve the first 2 problems, a continuous, easy-to-compute metric for graph similarity is used called D-measure. To solve the problem of choice of supercell, they simply compared all possible supercells that have the same number of atoms and chose the pair that had the minimum difference in graph similarity (a choice that can be rationalized by minimization of free energy).

They demonstrate that the D-measure is an indicator of interzeolite transformations and provide insight on why different mechanisms of interzeolite transformations correspond to different clusters of D-measure. For instance, interzeolite transformations between isomorphic zeolites correspond to kinetic transformations because there is no net change in bonds. Finally, the authors mine a database of theoretical zeolites and explicitly identify new potential interzeolite pairs based on those that have already been experimentally realized, providing a clear path for experimental testing of this graph-based mechanism.

Commentary

A vastly hidden source of man-hours for this paper would be developing the literature scraping pipeline. In the same way that many experimental breakthroughs are actually the product of many unwritten experimental tricks and hacks, much of the work in gathering, mining, and cleaning the literature corpus are not easy to describe or communicate via a paper. After all, 90% of machine learning is just data cleaning.

In addition to the diversity of scientific paper formats, part of the reason that literature is so difficult to parse is because much of it is behind paywalls. Indeed, researchers are forbidden from open-sourcing their scraped literature corpuses, if any work in the corpus was obtained via a private sharing agreement with a scientific publisher. Until we move toward a more open-source scientific publishing model, such literature data-mining work will continue to be silo’d in a handful of well-connected research groups with special access to publisher API’s. The potential for literature data mining as a method for scientific hypothesis testing will be hobbled until researchers can share and benchmark their models in a similar fashion as is done in the machine learning community.

This work is particularly interesting because of its interdisciplanary nature - graph isomorphisms are well known to any computer science undergraduate, but no CS undergrad could have produced this work. On the other hand, one must wonder how many other small mysteries like interzeolite transformations are waiting to be solved by the simple application of a well-known concept from computer science, or math, or statistics? In any case, we will begin to see more and more graduate students who are training themselves to speak two languages: that of their chosen scientific field of study and data science/programming/computer science/machine learning. And this newsletter is trying to explore that intersection.

P.S. If that described you, I’d love to hear from you about your experience! Feel free to reach out to me at ml4science@gmail.com or on Twitter @charlesxjyang21.

Finally, this work is an interesting example of a new way of doing science. It is not really replacing experimental work; indeed, it uses experimentally verified work as the basis for testing. But what differs is that the authors did not do the experimental work themselves. They developed a scientific hypothesis, scraped experimental results from literature, and then tested their hypothesis by comparing it to the testbed of experimental results. This form of scientific hypothesis testing is perhaps most similar to medical meta-reviews that try to discern the efficacy of a medical treatment by analyzing many clinical trials in different conditions of the same treatment, but it is also different in the toolset it uses, namely, natural language processing (NLP). I don’t see this as a revolutionary change in how we conduct science: after all, Kepler formulated his laws of planetary motion by analyzing the planetary data compiled by Tycho Brahe. NLP is simply a machine-based tool that is able to analyze much larger amounts of data i.e. text, that humans could never efficiently analyze.

Interested in learning more about scientific literature data mining? Check out my first issue of ML4Sci where I discussed how to learn word embeddings to discover new materials.

[arxiv preprint] [code][article-scraper]

Visualizing the Consequences of Climate Change

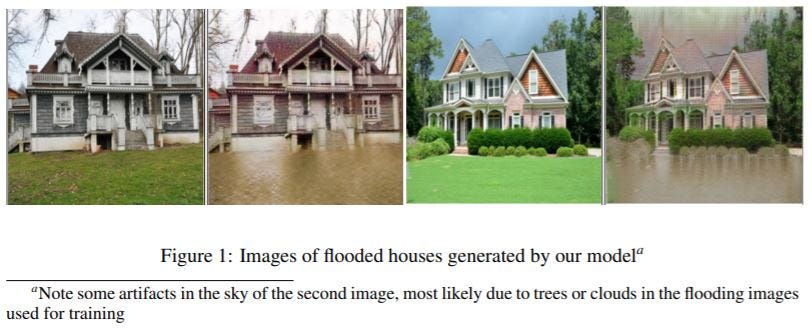

Climate change is one of the most alarming problems of the century. One of its challenges is that it is slow and difficult to feel - we don’t really feel the climate changing over the course of years, even though on a geological scale what is happening to our planet is radically unprecedented. This new work published at the ICLR AI for Social Good workshop uses cycle-consistent GAN’s to transform Google Street View images of houses to show what they would look like flooded due to rising sea levels.

Cycle-consistent GAN’s are a paired system of 2 GAN’s that are trained to transform from one image domain to another image domain e.g. horses to zebras or in this case, flooded houses to not flooded houses. By maintaining invertibility between two image domains, cycle-consistent GAN’s are a good solution to the problem of unpaired image translation. While there has been much discussion around how GAN’s allow anyone to create “deep fakes” that distort reality in extremely convincing ways, this work is a good example of how creating convincing new realities has positive applications e.g. climate change impact awareness (similar to how mirror boxes are used to treat phantom pain). Of course, this raises a whole host of ethical and moral issues, such as who gets to decide what new realities we try to create.

Thank You for Reading!

I hope you’re as excited as I am about the future of machine learning for solving exciting problems in science. You can find the archive of all past issues here and click here to subscribe to the newsletter.

Have any questions, feedback, or suggestions for articles? Contact me at ml4science@gmail.com or on Twitter @charlesxjyang