ML4Sci #31: ML identifies more anti-CRISPR proteins; Transformer protein language models are unsupervised learners

My attempt to dive into some ML4Bio. Also, transformers are eating the world

Hi, I’m Charles Yang and I’m sharing (roughly) weekly issues about applications of artificial intelligence and machine learning to problems of interest for scientists and engineers.

If you enjoy reading ML4Sci, send us a ❤️. Or forward it to someone who you think might enjoy it!

As COVID-19 continues to spread, let’s all do our part to help protect those who are most vulnerable to this epidemic. Wash your hands frequently (maybe after reading this?), wear a mask, check in on someone (potentially virtually), and continue to practice social distancing.

ML approach expands the repertoire of anti-CRISPR protein families

Published July 29, 2020

CRISPR is a novel gene-editing technology that gives us high-fidelity control over editing and writing the underlying genetic code. With such a powerful tool, it is also important to be able to modulate, control, and inhibit it, which is usually done with anti-CRISPR proteins (Acrs). The question then is, how do we find Acrs out in the wild? This paper uses an ensemble of extremely randomized trees (a random-forest variant) from sklearn. The training dataset is generated from a set of (n=1000) known Acrs and a random set (n=4000) of proteins (since most proteins are not Acrs). They demonstrate that their model performs well on a new dataset of Acrs, then use the trained model to search through 182M proteins and narrow the candidates down with a set of heuristics. Finally, they experimentally validate the top model predictions and report the discovery of several new Acrs.

What struck me most about this paper is the extraordinary depth of domain knowledge required. This paper is not a “fit-randomforest-to-dataset” approach: they use a complex set of heuristics for filtering and well-established techniques for protein comparisons, coupled with massive libraries of biological information. And then there’s the experimental validation. The ML part is one small, but still plausibly important, part of this pipeline for finding needles in haystacks.
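To give a feel for just the classifier step described above (and only that step, which is the small part of the pipeline), here is a minimal sketch using sklearn's `ExtraTreesClassifier`. Everything about the data is an assumption for illustration: the features are random numbers standing in for whatever protein descriptors the authors actually used, the 1:4 class ratio mirrors the numbers above at a smaller scale, and the hyperparameters are not taken from the paper.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Hypothetical stand-in data: 100 "known Acrs" and 400 "random proteins",
# each described by 6 made-up numeric features.
n_acr, n_background, n_features = 100, 400, 6
X_acr = rng.normal(loc=1.5, size=(n_acr, n_features))
X_bg = rng.normal(loc=0.0, size=(n_background, n_features))
X = np.vstack([X_acr, X_bg])
y = np.concatenate([np.ones(n_acr, dtype=int), np.zeros(n_background, dtype=int)])

# Hold out a stratified test set so both classes appear in each split.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Extremely randomized trees; class_weight="balanced" is one way to
# compensate for positives being the minority class.
clf = ExtraTreesClassifier(n_estimators=200, class_weight="balanced", random_state=0)
clf.fit(X_train, y_train)
test_accuracy = clf.score(X_test, y_test)

# Score a pool of unlabeled candidates and keep the 10 highest-ranked ones.
candidates = rng.normal(loc=0.0, size=(1000, n_features))
scores = clf.predict_proba(candidates)[:, 1]  # column 1 = probability of class 1 (Acr)
top = np.argsort(scores)[::-1][:10]

print(f"held-out accuracy: {test_accuracy:.2f}")
print("top candidate indices:", top)
```

In the real pipeline, a ranked list like `top` is where the work begins rather than ends: the heuristic filtering and wet-lab validation come after it.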

Transformer protein language models are unsupervised structure learners

Published December 15, 2020

A collaboration between Harvard, NYU, and Facebook AI researchers

Protein contact prediction, which asks which pairs of residues in a protein sit close together in its folded form, is intimately related to understanding the structure of a protein and, just like in materials, the structure dictates property. In this work, the authors use transformers, an increasingly ubiquitous model built on self-attention, to do unsupervised learning on protein sequences. In this context, given a corpus of protein sequences, they train a transformer with a masked language modeling objective: hide some of the amino acids in a sequence and predict them from the rest. Then, the attention heads of the trained transformer are used as features for a simple logistic regression model to do protein contact prediction. This unsupervised approach beats other models at contact prediction.
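The "attention heads as features for logistic regression" idea can be sketched in a few lines of NumPy. This is a toy under loud assumptions, not the authors' code: the attention maps are synthetic (two heads are rigged to light up on contacts, the rest are noise), the contact map is a made-up pattern, the minimum sequence separation of 6 is an arbitrary choice, and the logistic regression is a plain gradient-descent fit with no regularization.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, n_heads, min_sep = 40, 8, 6

# Made-up "true" contact map (a few anti-diagonal stripes).
# Real labels would come from experimentally solved structures.
idx = np.arange(seq_len)
contacts = (idx[:, None] + idx[None, :]) % 7 == 0

# Stand-in for attention maps, shape (n_heads, seq_len, seq_len).
# Heads 0 and 1 are rigged to attend to contacts; the rest are pure noise.
attn = 0.1 * rng.random((n_heads, seq_len, seq_len))
attn[:2] += 0.5 * contacts

# Symmetrize, since a contact between residues i and j has no direction.
attn_sym = attn + attn.transpose(0, 2, 1)

# One example per residue pair (i, j) with j - i >= min_sep;
# its features are that pair's attention value in every head.
i, j = np.triu_indices(seq_len, k=min_sep)
X = attn_sym[:, i, j].T            # shape (n_pairs, n_heads)
y = contacts[i, j].astype(float)   # 1.0 if the pair is in contact

# Plain logistic regression fit by gradient descent on the mean log-loss.
w, b, lr = np.zeros(n_heads), 0.0, 1.0
for _ in range(2000):
    p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
    w -= lr * (X.T @ (p - y)) / len(y)
    b -= lr * np.mean(p - y)

pred = (X @ w + b) > 0
accuracy = np.mean(pred == (y == 1))
top_heads = sorted(int(h) for h in np.argsort(w)[-2:])

print(f"training accuracy: {accuracy:.2f}")
print("heads with the largest weights:", top_heads)
```

The point of the toy is the shape of the problem: each residue pair becomes one row, each attention head becomes one column, and the regression only has to learn which heads to listen to.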

Several important trends are illustrated in this paper:

transformers are becoming the favored model architecture in NLP-like tasks, but also elsewhere

unsupervised sequence prediction with deep neural network architectures can capture latent features that other methods struggle to distill. See ML4Sci #1 unsupervised word embeddings for material discovery

Why might Facebook AI Research be interested in this work? (also see below for more on what FAIR’s been up to in the ML4Bio space)

Department of Machine Learning

From the PapersWithCode newsletter: transformers are eating the world!

[FacebookAI] continues their advance into medical AI - this time using X-rays to run COVID-19 prognosis and triaging. This collaboration between FB and NYU previously demonstrated the ability to accelerate MRI scans by a factor of 4

♛“Introducing Maia - a human-like neural network chess engine”. Chess engines, which are far better than human players at this point, have the problem that they often make extremely unintuitive and frankly weird-looking moves. This chess engine learned to make “human-like” moves, which helps us better understand the model and also see characteristic human-type mistakes at different levels.

From Dropbox: how ML saves us $1.7M a year on document previews (and they don’t use a neural network). Another nice example of the difficulties and challenges with operationalizing ML on large-scale streamed data.

What Google’s been up to:

[GoogleAI] Looking back at all that Google Research has done in 2020 - COVID-19, ML4Weather, Robotics, and more (they could honestly be their own CS department)

A nice youtube lecture from Google researchers on chip optimization and device placement, which I briefly mentioned in ML4Sci #10

[VentureBeat] Google trains a trillion-parameter AI language model using a novel Switch Transformer architecture. VentureBeat gives balanced coverage of the technical details but also highlights the biases in large-scale language models and the ethical concerns of large tech companies building models that may encode discrimination.

Near-Future Science

📖 An open-source git textbook: “Deep Learning for Molecules and Materials” by Andrew White, Chemical Engineering Professor at University of Rochester

💊[ACS] “Is drug repurposing worth the effort?” A critical look at drug repurposing efforts for COVID-19. The more I learn about biology, the more I’ve realized it is a field full of difficult problems that require careful domain knowledge and cautious respect, and not the casual confidence of someone who knows how to fit AI models to a dataset

[Youtube] Using Neural Networks for learning physical dynamical systems

🏫[Youtube] Deep Learning for Science School by NERSC playlist

The Science of Science

[Medium] How to Get started in Materials Informatics [h/t Nathan Frey]

[ScienceMag] “Disgraced COVID-19 studies are still routinely cited”

Reflections on 2020 as an Independent Researcher - an insightful analysis into the shortcomings of the structured scientific enterprise and how the “passion economy” may enable more independent researchers in the future

💻[NatureNews] “Ten computer codes that transformed science” including Fortran, Arxiv, Jupyter Notebook, AlexNet

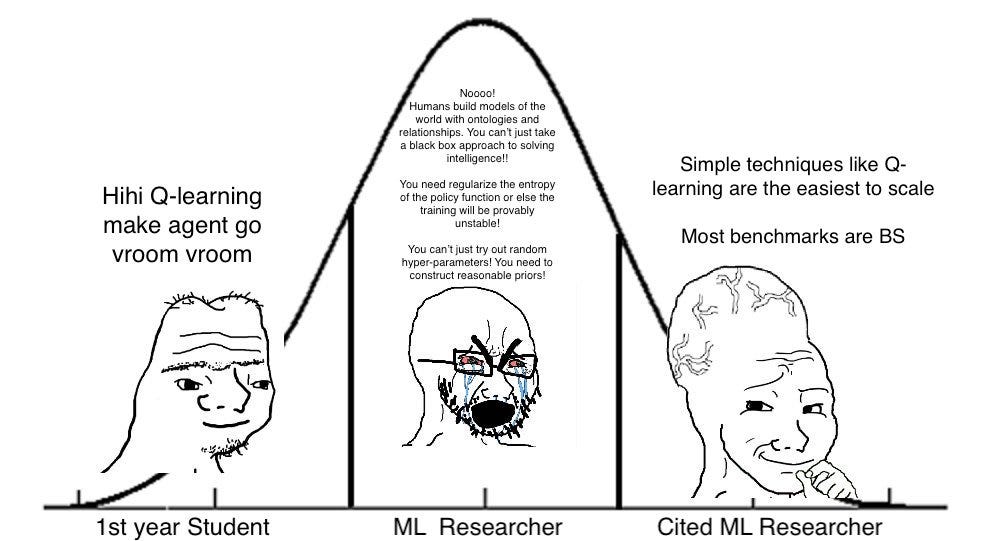

“Statistical Modeling: The Two Cultures” by Leo Breiman, inventor of the Random Forest. Written in 2001, Breiman decries how statisticians look down on black-box empirical models, such as decision trees and neural nets. How the turned tables indeed

🌎Out in the World of Tech

📜Axios, a news organization, releases a Bill of Rights pledging no AI-written stories ever

📈[Algorithmia] Trends in Enterprise ML - another one of those great slide-decks hidden on the interwebs. In summary: even more companies are trying to do ML, it’s hard, and DevOps is a growing ML industry

[NYT] Facial recognition technology continues to lead to wrongful arrests of African Americans

[Wired] Palantir’s facial recognition system in Afghanistan

🧠🎮From 2018: Facebook open-sources Horizon, a reinforcement-learning algorithm designed for production-scale deployment. In the paper, they showcase their model’s performance on sending relevant Facebook push notifications. Ironically, this algorithm is closely related to the same one Google used to beat Atari games…except this time, we are the game

Policy and Regulation

🏛️From the Biden White House: “Memorandum on Restoring Trust in Government Through Scientific Integrity and Evidence-Based Policymaking” and a [ScienceMag] review of the executive order

Thanks for Reading!

I hope you’re as excited as I am about the future of machine learning for solving exciting problems in science. You can find the archive of all past issues here and click here to subscribe to the newsletter.

Have any questions, feedback, or suggestions for articles? Contact me at ml4science@gmail.com or on Twitter @charlesxjyang
