ML4Sci #37: DeepMind’s Annus Mirabilis

Large AI for Science models are the new digital particle accelerators

Hi, I’m Charles Yang and I’m sharing (roughly) monthly issues about applications of artificial intelligence and machine learning to problems of interest for scientists and engineers.

Hello friends,

It has been a while - apologies for the delay in newsletters! I’ve found myself unexpectedly busy the past 6 months, doing a summer stint at the Department of Energy’s ARPA-E, starting a new job as an ML Engineer at a stealth startup, and doing climate consulting with Actuate Innovation (the best tagline description I have so far for this small organization of ex-DARPA leaders is “DARPA but with non-profit financing”). I probably won’t be sending posts as frequently or regularly as I used to, but I had a few thoughts floating around over winter break that I wanted to share. All views here are still my own.

Year-in-reviews always seemed like an appropriate mix of vogue and cliche, so I figured I’d take my stab at a slightly late two-part series.

DeepMind’s Annus Mirabilis:

Google’s DeepMind spent 2021 continuing to push out ground-breaking work applying AI to a variety of important scientific problems.

This is perhaps one of the best groups to watch for Manhattan Project-style AI efforts. Unrestrained by the incentives and limits of scientific academia (publish or perish, tenuous and short-term federal funding, and high labor turnover, i.e. grad students), DeepMind is quickly becoming the Bell Labs for AI. In particular, following DeepMind is a good way to glimpse the future possibilities of AI applications in scientific domains. Here are, in my opinion, their 3 most impactful projects this year.

Foremost was AlphaFold v2, the successor to the original AlphaFold model, first announced in late 2020. This year, DeepMind open-sourced AlphaFold’s model code and provided 350,000 protein structure predictions for biologists around the world to use. Currently, the vast majority of protein structures have not been experimentally measured. These AI-predicted protein structures help fill in the gap and give biologists solid baselines to work from when developing new theories for mechanisms.

DeepMind also took significant steps forward in Density Functional Theory (DFT), using an AI model to error-correct DFT’s electronic structure predictions. Their model produces strong results on a variety of molecules, including hydrogen chains, transition states, and DNA structures. DFT calculations are often used to validate proposed mechanisms for experimental results or as a data generator for training data-driven chemical discovery models. Given DFT’s importance as a theoretical tool for experimentalists and as a computational substrate for generating large-scale datasets like those found in the Materials Project, DeepMind’s improvement on DFT with deep learning is an important step toward improving the accuracy of DFT predictions and correcting its known systematic failures.

Finally, DeepMind partnered with several pure mathematicians to formulate novel conjectures in the fields of topology and representation theory. It is perhaps in this paper that we get the clearest picture of future collaboration between AI and scientists, or in this case mathematicians. This AI model (with its highly paid DeepMind trainers and decoders) sifts through impossibly high-dimensional spaces and elucidates relationships that would otherwise be hidden, helping provide more concrete evidence to support mathematicians’ conjectures about abstract groups.

Each of these accomplishments is impressive on its own. That one organization focused on AI can break new ground in so many scientific domains is a testament to the potential of AI to alter the scientific discovery landscape. Some general observations about AI for Science systems:

🔬 AI as a fuzzy but powerful microscope: AI data-driven predictions of the physical world provide us an estimate, a hazy mirage, of complex systems we could not otherwise simulate or measure. We see through a mirror dimly, but AI can sharpen the image for us (sometimes literally!). In this sense, these predictions are a powerful new tool in the scientist’s toolkit to guide intuition and identify areas of interest to focus time and experiments on.

📉 AI has a unique CapEx/OpEx tradeoff: these massive AI models often require large amounts of scientific data and compute. For instance, AlphaFold required 128 TPU cores for 10 days. There are very few places in the world with the compute infrastructure and human expertise needed to reproduce this result. However, once the model is trained, the model backbone can be open-sourced and served up as a web-request API. In other words, the upfront capital, compute, and labor costs are high, but afterwards, the model backbone can be deployed globally and fine-tuned locally.
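To make the CapEx/OpEx tradeoff a bit more concrete, here is a rough back-of-the-envelope sketch in Python. The 128 TPU cores and 10 days come from the AlphaFold figure above; the price per core-hour, the per-query inference cost, and the function names are hypothetical placeholders chosen purely for illustration, not real cloud prices or anything DeepMind has published.

```python
def training_core_hours(cores: int, days: float) -> float:
    """Total accelerator core-hours for one training run."""
    return cores * days * 24


def amortized_cost_per_query(upfront_cost: float,
                             cost_per_query: float,
                             num_queries: int) -> float:
    """Upfront (CapEx-like) cost spread over all queries, plus the
    marginal (OpEx-like) cost of serving each query."""
    return upfront_cost / num_queries + cost_per_query


# From the text: 128 TPU cores for 10 days.
core_hours = training_core_hours(cores=128, days=10)  # 30720 core-hours

# Hypothetical numbers, for illustration only.
PRICE_PER_CORE_HOUR = 1.00       # assumed price in dollars
INFERENCE_COST_PER_QUERY = 0.01  # assumed marginal cost in dollars

upfront = core_hours * PRICE_PER_CORE_HOUR

for n in (1_000, 100_000, 10_000_000):
    cost = amortized_cost_per_query(upfront, INFERENCE_COST_PER_QUERY, n)
    print(f"{n:>10,} queries: ${cost:,.4f} per query")
```

The point is the shape of the curve rather than the specific numbers: the one-time training cost dominates at low usage, while the per-query cost approaches the marginal serving cost as more people use the trained model.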

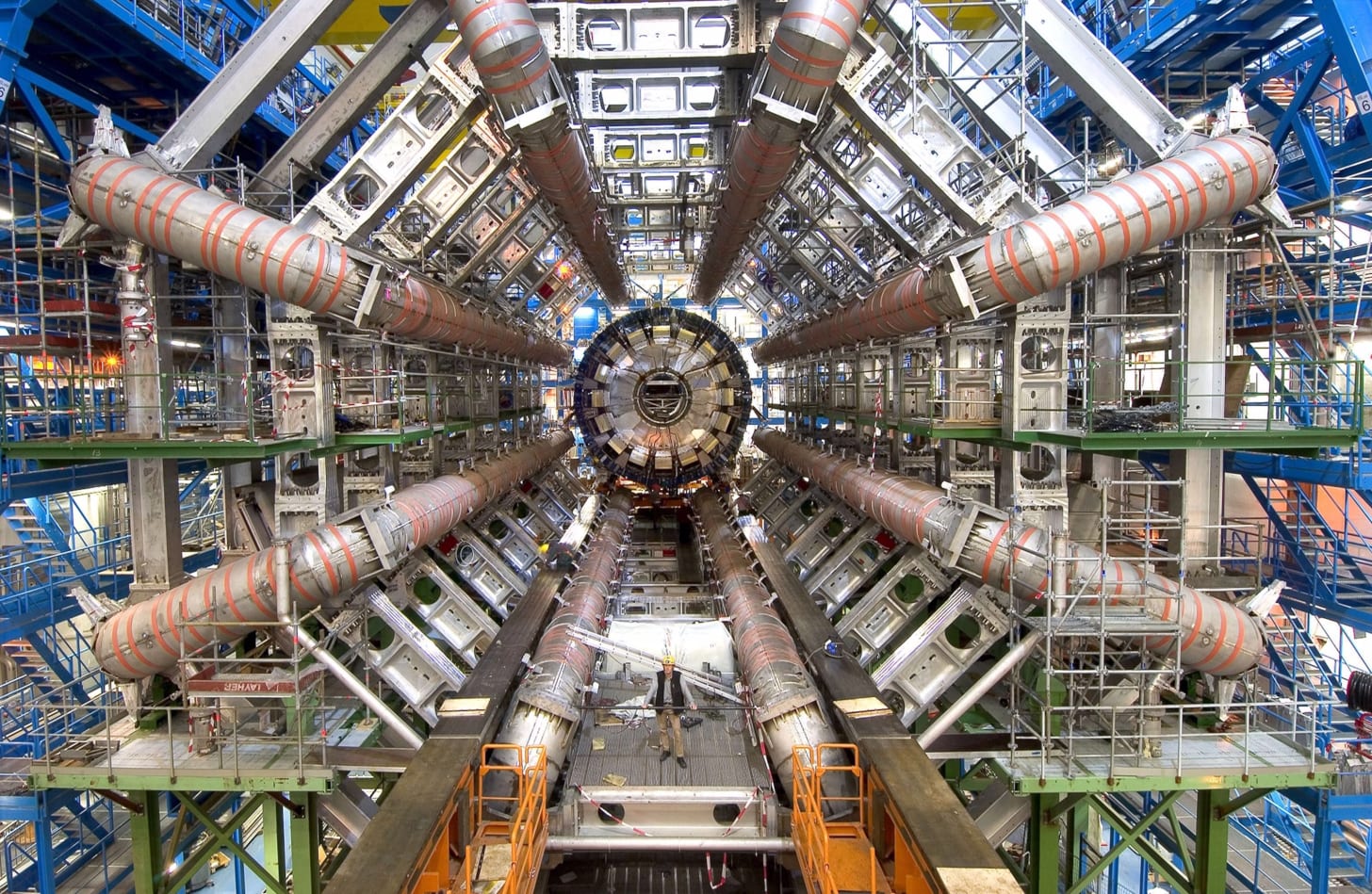

These two observations lend themselves to viewing AI4Science projects as large digital particle accelerators. The metaphor of the particle accelerator captures the enormous breadth of infrastructure, talent, and capital required to build these models. It also captures the unique ability of AI to peer a little deeper and a little farther than before, parsing through the fog of data to reveal novel discoveries.

But, somewhat unlike real-world particle colliders, because these are digital technologies, once built (in the cloud), they can be accessed from any individual’s laptop. It’s as if, once you built the Large Hadron Collider, anyone with a laptop and an internet connection could run any experiment they wanted on the LHC, simultaneously.

One trend that cuts against this metaphor of AI as particle colliders is that cloud accessibility and increasingly higher-level software for training AI models will allow anyone with sufficient capital to abstract away all the hyperscaling and data management required to train large-scale AI models. However, I think for the foreseeable future, large AI4Science models will still require on-prem hardware (and be a lot cheaper with it), as well as significant labor and talent to build out the training infrastructure.

None of this analogy is meant to lessen the potential laptop-scale AI models have for providing new insight and novelty in scientific experiments for different projects. But my hypothesis is that the breakthrough, field-advancing AI models for scientific fields will be more analogous to a particle accelerator than to a lab beaker.

The question to ask this year is: who is going to be funding and building these digital particle accelerators?

On that note: if you’re interested in contributing to AI4Science outside of the constraints of academia, check out this new effort from Pasteur-ISI to simulate science using AI. A public-benefit, VC-funded startup, they’re hiring for Applied ML & Simulation Engineering, Infrastructure Engineering, and Research Fellow / Resident roles. Not sure what exactly they’re up to, but they seem to be one group that is finally trying to capitalize on and scale all the benefits of AI for science outside of large corporate R&D labs and academia. (See this arXiv preprint from founder Alexander Lavin et al. for some insight into their approach.)

Thanks for Reading!

I hope you’re as excited as I am about the future of machine learning for solving exciting problems in science. You can find the archive of all past issues here and click here to subscribe to the newsletter.

Have any questions, feedback, or suggestions for articles? Contact me at ml4science@gmail.com or on Twitter @charlesxjyang