ML4Sci #27: Some theses about ML4Sci

Also, "repairing innovation" and Semantic Scholar Recommendation Feeds

Hi, I’m Charles Yang and I’m sharing (roughly) weekly issues about applications of artificial intelligence and machine learning to problems of interest for scientists and engineers.

If you enjoy reading ML4Sci, send us a ❤️. Or forward it to someone who you think might enjoy it!

As COVID-19 continues to spread, let’s all do our part to help protect those who are most vulnerable to this epidemic. Wash your hands frequently (maybe after reading this?), wear a mask, check in on someone (potentially virtually), and continue to practice social distancing.

We’ve had a lot more people join this ML4Sci newsletter community since I last wrote - welcome to all! I figured now is a good time to collect and write out a lot of the hypotheses/observed trends that have been bouncing around the (now 27!) issues of this newsletter.

1. Intersections are Unique

This newsletter lives at the intersection of machine learning and the sciences. The field of machine learning has historically been driven by commercial tech interests (see #3) and, as a result, its tools, techniques, and culture have been shaped, for better or worse, by those interests. In the sciences, however, we work with a different set of problems and constraints. Building new computational tools for a different set of criteria and datasets will require both an understanding of the scientific domain and a command of the deluge of novel computational techniques that we now call “machine learning”.

In other words, ML4Sci is not simply taking what the ML community builds and calling model.fit(). It will require new tools and techniques that are subtly, but importantly different from their predecessors. Building these tools will require a nuanced understanding of domain problems and a deep knowledge of ML techniques. The whole endeavor of science will be changed, but only through a careful analysis of the unique forces fomenting at this intersection will we be able to understand the broader implications for science as a whole.

2. A whole-of-science perspective on ML4Sci yields unique insights

One could easily argue that this newsletter, which purports to cover machine learning applications for all of science, suffers from a scope problem. Why not write a newsletter about ML for chemistry? Or protein folding? Or astrophysics? After all, each of those fields is rich with its own nuances and unique problems - how can such a newsletter possibly hope to generalize?

I would argue that there is a distinct advantage to studying multiple different fields of science and how they adopt ML. When we look at things from a whole-of-science perspective, we can separate field-specific quirks from broader trends.

You can also learn valuable lessons from different communities. For instance, the astrophysics and genomics communities have long been familiar with computational modelling methods and have a mature base of folks who do this as their entire research scope. By understanding these fields, we can gain insights for other fields that are only now maturing into software-driven science.

Finally, the unique intersection of ML and the Sciences gives us an opportunity to learn from the ML community as well. Computer scientists have usually led disruptions in academic workflows, particularly around new and improved software platforms. See #4 for one example of this.

See more:

Seeing graph neural networks everywhere: ML4Sci #18: using graph neural networks for understanding glassy materials and buildings

3. ML4Sci is disproportionately dominated by tech companies, with unknown consequences

Because many large tech companies have amassed storehouses of top-notch AI talent, they also have a strong grip over ML4Sci, particularly in fields that are ripe for commercialization, e.g. AI for healthcare, genomics, and agriculture. While private-public research enterprises have always been a good regularizer to ensure research is solving the right problems, it could be argued that early privatization of such an important field and set of techniques may have negative effects on, say, open-sourcing results and broader innovation. And in the same way that early AI technologies were focused on the problems scoped out by tech companies, there is a danger that the ML4Sci community will build itself up around a pillar of early, commercializable problems at the expense of more fundamental research. I don’t have a clear opinion or argument for this, except to say that heavy privatization of such an important field, along with a confluence of other societal trends (tech monopolies, social media platforms, AI geopolitics, and free speech), makes this an important dimension of ML4Sci to keep in mind.

4. We need new ways of communicating and organizing science (like this newsletter!), and improved incentive structures

As mentioned in #2, we can often learn valuable lessons from the ML community, whose members have become early adopters of different software platforms and are currently experimenting with new and different forms of peer review. The exponential rise in ML papers mirrors a similar trend in the broader enterprise of science. In order to help scientists manage the flood of information (potentially with AI), we need to think about better ways of managing peer review. There is no reason why research needs to be done exclusively in person, over email, or through dense peer-reviewed journal articles.

Loosely speaking, we have low-level (peer-reviewed articles) and high-level (pop-sci articles) communication channels, but there is a missing abstraction level that is currently filled by blog posts and slide decks strewn across the internet. I’ve found that the best of these are often extremely useful to practitioners (and me), who need review-style papers that aggregate useful trends and identify critical papers, without the academic jargon. If compression is learning, then we are clearly missing people who are paid and invested in efficiently compressing progress in a field and who can communicate it clearly to others.

Finally, changes in how we do science will require new structural organizations and reformed incentive structures. We need better ways to find these valuable resources (separating the wheat from the chaff, as it were, is notoriously difficult online) and to reward the people who create them. Organizing and disseminating information via digital channels (Twitter, Slack, Reddit, Medium, Substack) is also a growing enterprise, though not without its own pitfalls.

This newsletter exists on the hypothesis that there is an unfilled appetite for this kind of systematic analysis written in accessible, yet technical prose. I’m also still thinking of different ways to help build out this community - shoot me any ideas/proposals you have!

See more:

ML4Sci #12: Thoughts on COVID-19, Scientific Gatekeeping, and Substack Newsletters

ML4Sci #23 - “The Science of Science” (mass publishing, advisor abuse, PhD Grind)

📰In the News

ML

Two kinds of stories: MIT Tech Review interviews Geoff Hinton, who claims “Deep Learning is going to be able to do everything”. Meanwhile, the National Geospatial-Intelligence Agency (NGA) is offering a cash prize to teams that can “identify locations from audio and video data”[competition].

I always get annoyed when the most meaningful thing people say about AI is “it will do everything”. Like, ok, maybe you’re right, but that doesn’t really tell us anything useful. The latter example is much more interesting and insightful - I certainly would not have thought of that on my own!

💬“We don’t speak the same language: interpreting polarization through machine translation” - super interesting NLP analysis of how different political groups “speak” different languages and hints at how statistical analysis of language corpuses might be able to quantify broader trends.

“Cybersecurity attacks against ML systems are more common than you think” - joint work between Microsoft and government contractor MITRE

🏥A great case study from Duke using AI for sepsis detection in a hospital system: “Repairing Innovation: A study of Integrating AI into Clinical Care”. In particular, they examine AI integration as a sociotechnical problem and argue that disruptive innovation requires “repairs” to the system in order to create new protocols, structures, and culture.

From Google: “Underspecification Presents Challenges for Credibility in Modern Machine Learning”. A review of the difficulties encountered in applying ML to the real-world @Google (and a nice follow-up to the thread of articles about ML DevOps from the past several issues). The challenge of integrating AI systems into our actual daily-life systems is the next great hurdle for AI adoption - ML4Sci will have its own unique challenges as well (e.g. distribution shifts from different spectroscopic calibrations or failing to generalize on newer hardware).
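To make the calibration example concrete, here is a minimal sketch of how a distribution shift can break a model that looked fine in testing. Everything in it is an assumption made up for illustration: the single "peak intensity" feature, the two classes, the noise level, and the +0.8 calibration offset on a second instrument. It is not taken from the Google paper.

```python
import random

def simulate(rng, n, offset=0.0):
    """Draw n samples per class of one 'peak intensity' feature.
    `offset` is a hypothetical calibration difference between instruments."""
    data = []
    for label, centre in ((0, 1.0), (1, 2.0)):
        for _ in range(n):
            data.append((rng.gauss(centre, 0.1) + offset, label))
    return data

def fit_threshold(data):
    """Toy 'model': a threshold halfway between the two class means."""
    means = []
    for label in (0, 1):
        values = [x for x, y in data if y == label]
        means.append(sum(values) / len(values))
    return (means[0] + means[1]) / 2.0

def accuracy(data, threshold):
    """Fraction of samples where 'x above threshold' matches the label."""
    correct = sum((x > threshold) == bool(y) for x, y in data)
    return correct / len(data)

rng = random.Random(0)
train = simulate(rng, 200)                          # instrument A
test_same = simulate(rng, 200)                      # instrument A, fresh samples
test_shifted = simulate(rng, 200, offset=0.8)       # instrument B, shifted calibration

threshold = fit_threshold(train)
acc_same = accuracy(test_same, threshold)
acc_shifted = accuracy(test_shifted, threshold)
print(f"same instrument: {acc_same:.2f}, shifted instrument: {acc_shifted:.2f}")
```

Under these assumptions the threshold should stay accurate on held-out data from the same instrument and drop toward chance on the shifted one, even though nothing about the underlying classes has changed.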

👏MadeWithML pivots to “end the hype and deliver actual value” - an impressive move to build real ML products and tutorials, instead of “build a CNN in keras in 10 lines of code”.

[TheGradient] “Interpretability in Machine Learning” - a nice overview

One of those great slide decks I mentioned in hypothesis #4: a comprehensive slide deck of recent NLP advances. A tutorial from the EMNLP conference, presented by researchers from Univ. of Washington and Google.

Science

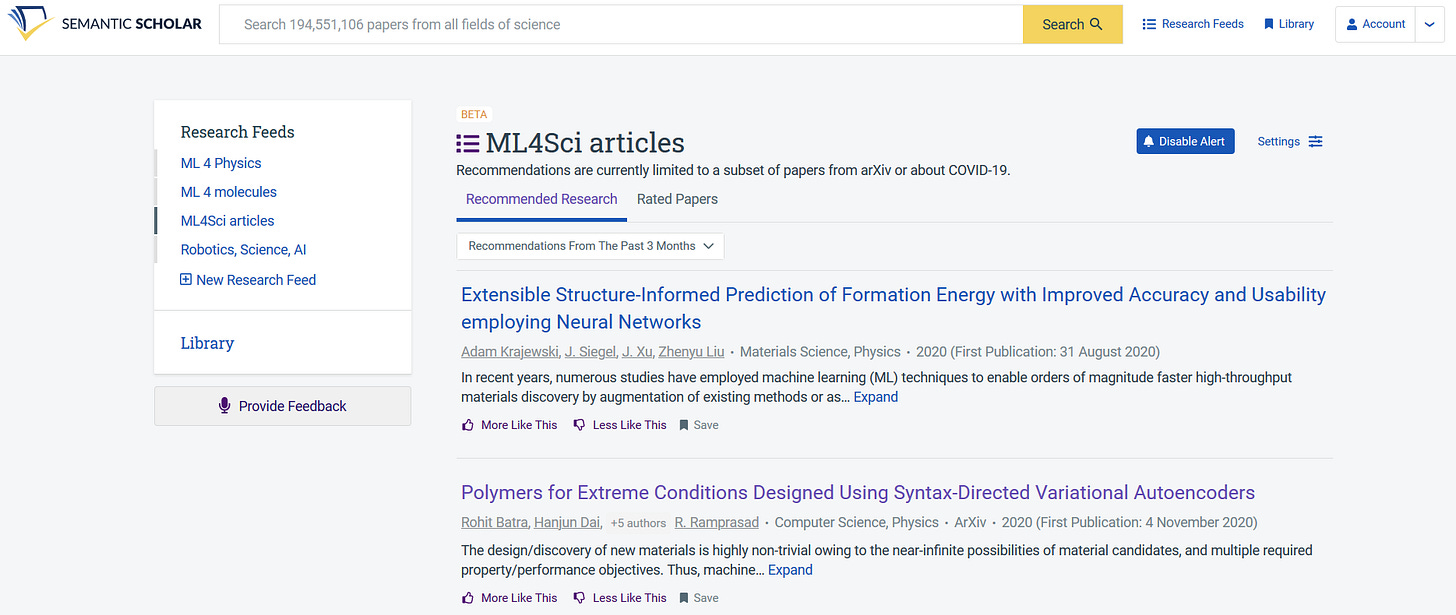

The Allen Institute for AI has a scientific literature research tool called Semantic Scholar, which has a super cool feature that essentially builds an article recommendation feed based on articles you “liked”. Naturally, I plugged in every article I’ve ever written about in ML4Sci and made my own ML4Sci recommendation feed (though I’d probably recommend picking more specific topics; this was just an experiment). I’ve already submitted a request to Semantic Scholar for a feature where these feeds can be made public, like Twitter lists, but unfortunately such a feature does not yet exist. In any case, here’s a sample of the recommendations from this week:

“Polymers for Extreme Conditions Designed Using Syntax-Directed Variational Autoencoders”

“Graph Neural Network Architecture Search for Molecular Property Prediction” - apparently Neural Architecture Search gives you better performance than naive architectures for molecular property prediction!

“Direct prediction of phonon density of states with Euclidean neural network”

Euclidean neural networks are NNs that have built-in equivariances by design.
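For readers who haven't met the term, "equivariant" means that transforming the input and then applying the layer gives the same answer as applying the layer and then transforming the output. The toy sketch below checks this numerically for 2D rotations. It is an illustration only, not the paper's architecture: the layer, the points, and the function names are all made up, and real Euclidean neural networks work with 3D symmetries.

```python
import math

def rotate(points, angle):
    """Rotate 2D points about the origin by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y) for x, y in points]

def equivariant_layer(points):
    """Toy layer: each point gets a sum of relative vectors to the other
    points, weighted by a function of distance only."""
    out = []
    for i, (xi, yi) in enumerate(points):
        vx = vy = 0.0
        for j, (xj, yj) in enumerate(points):
            if i == j:
                continue
            dx, dy = xj - xi, yj - yi
            w = math.exp(-(dx * dx + dy * dy))  # rotation-invariant weight
            vx += w * dx
            vy += w * dy
        out.append((vx, vy))
    return out

def naive_layer(points):
    """Toy layer with no symmetry built in: x and y are treated differently."""
    return [(2.0 * x + 1.0, -0.5 * y) for x, y in points]

def max_gap(a, b):
    """Largest coordinate-wise difference between two lists of 2D vectors."""
    return max(abs(p - q) for u, v in zip(a, b) for p, q in zip(u, v))

points = [(0.0, 0.0), (1.0, 0.2), (-0.3, 0.8)]
angle = 0.7

# Compare layer(rotate(x)) against rotate(layer(x)) for both layers.
equiv_gap = max_gap(equivariant_layer(rotate(points, angle)),
                    rotate(equivariant_layer(points), angle))
naive_gap = max_gap(naive_layer(rotate(points, angle)),
                    rotate(naive_layer(points), angle))
print(f"equivariant gap: {equiv_gap:.2e}, naive gap: {naive_gap:.2e}")
```

The first gap should be zero up to floating-point error, while the second is not. A generic network would have to learn the symmetry from data, whereas an equivariant one gets it for free from its construction.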

This tool is a nice demonstration of better science literature architecture (though I do wonder how such “social-media” style tools might suffer from the same biases as academic Twitter or social media as a whole). It also provides a much better analysis of a researcher’s profile than Google Scholar.

The Science of Science

“A narrowing of AI research?”[h/t Charlie You] - topic modelling of arXiv papers on AI suggests that AI research is zooming in too quickly on certain hot topics without enough diversity of exploration. The authors also correlate this with a huge spike in industry funding and engagement in the past decade.

🪐A couple issues ago, I shared a NYT article about hints of life on Venus. Now, that work has been cast into doubt because of the way the original authors removed noise and modeled the signals (they used... a 12th order polynomial). The steady progress of peer review indeed.

[NatureNews] “How DIY technologies are democratizing science”. One could argue that AI is also a “DIY” technology that is open to others

[NYT] A riveting account of the race to develop a COVID-19 Vaccine and Operation Warp Speed

🌎Out in the World of Tech

🤖Researchers at the US Census Bureau examine automation in the US

🇨🇳 [WSJ] The IPO of Jack Ma’s Ant Group, the fintech affiliate of Alibaba (China’s equivalent of Amazon), was “personally scuttled” by Xi Jinping. Well, it is certainly a different approach to tech regulation.

Policy and Regulation

[NPR] Tech policy in the Biden White House

[Slate] “A Court Ruling in Austria Could Censor the Internet Worldwide” - what happens when international platforms have conflicting national regulations?

Thanks for Reading!

I hope you’re as excited as I am about the future of machine learning for solving exciting problems in science. You can find the archive of all past issues here and click here to subscribe to the newsletter.

Have any questions, feedback, or suggestions for articles? Contact me at ml4science@gmail.com or on Twitter @charlesxjyang